In general, the more complex your integration setup is, the longer it takes to run the integration. To make performance issues visible, do not test with only a few records. Also test with the number of records that you expect the message to process on a daily basis.

Besides reducing the complexity of your integration, you can consider several options to improve its performance.

Document

In general, to limit the processing time, on your documents, only include the records and fields that you need for the integration. The more records and fields you have defined in your document, the longer it takes to process the integration.

To improve the performance of data import or export, you can also consider several document header settings. Which settings are available depends on the document type:

- External file-based

- Process type 'Direct':

If you import or export (big sets of) simple data, use the process type 'Direct'. For simple data, the document lines only have a root record. When a message is run, the data is mapped directly. More technically: the data is only loaded into the memory of the record table (usually the BisBufferTable). As a result, the import or export of data is faster.

- Read filename:

For external file-based documents, you can import all applicable files in the Source folder in parallel. To import several files in parallel, on the:

- Document header, in the Read file name field, define a range value. For example, use an asterisk (*). Example: Read file name = %1*.%2 and Sample file name = SalesOrder*.xml.

- Message header, set the performance Type to 'Parallel'.
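The effect of a wildcard in the Read file name field can be pictured with a small Python sketch. This is only an illustration of wildcard matching, not the product's own logic: the function `matching_files` and the folder contents are hypothetical, and the pattern is the sample file name from the example above.

```python
from fnmatch import fnmatchcase


def matching_files(file_names, pattern):
    """Return the file names that match a wildcard pattern (hypothetical helper)."""
    return [name for name in file_names if fnmatchcase(name, pattern)]


# Hypothetical contents of a Source folder.
source_folder = [
    "SalesOrder001.xml",
    "SalesOrder002.xml",
    "Customer001.xml",
    "SalesOrder003.txt",
]

# Every file selected here could be handed to its own parallel task.
selected = matching_files(source_folder, "SalesOrder*.xml")
print(selected)
```

Only the files that match both the name prefix and the extension are picked up; the other files stay in the folder.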

- Text or Microsoft Excel

- Split quantity:

For Text or Microsoft Excel documents, on import, you can split large files with a lot of records. Whether a file must be split is defined on the document header, in the Split quantity field. As a result, the original file is put in the Split folder instead of the Working folder. When the message is run, the original file is split into smaller files based on the split quantity. The smaller split files are put in the Working folder. Combined with the message performance Type set to 'Parallel', the message processes the split files in parallel to improve performance.
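As a rough illustration of what a split quantity does, the following Python sketch divides a list of records into smaller chunks. It is a simplified model under stated assumptions: `split_records` is a hypothetical helper, the records are plain strings, and header rows and folder handling are ignored.

```python
def split_records(records, split_quantity):
    """Split records into chunks of at most split_quantity records (hypothetical helper)."""
    if split_quantity <= 0:
        raise ValueError("split_quantity must be greater than zero")
    return [
        records[start:start + split_quantity]
        for start in range(0, len(records), split_quantity)
    ]


# Ten hypothetical records, split with a split quantity of 4.
records = [f"record-{number}" for number in range(1, 11)]
split_files = split_records(records, 4)

for index, chunk in enumerate(split_files, start=1):
    print(f"split file {index}: {len(chunk)} records")
```

The last chunk holds the remaining records, so it can be smaller than the split quantity.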

- ODBC

- Page size:

For ODBC documents, to improve performance when importing a lot of records, you can use paging. For paging, the records are split over several pages that run these records in batch tasks. On the document header, in the Page size field, enter the number of records to be processed by one batch task. Combined with the message performance Type set to 'Parallel', the pages are processed in parallel to improve performance.

- Internal

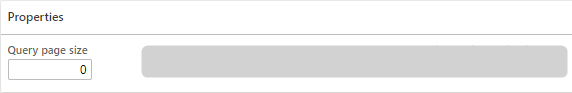

- Query page size:

For internal documents, to improve performance when exporting a lot of records, you can use paging. For paging, the records are split over several pages that run these records in batch tasks. On the document header, in the Query page size field, enter the number of records to be processed by one batch task. Combined with the message performance Type set to 'Parallel', the pages are processed in parallel to improve performance.
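Paging, as described for ODBC and internal documents, can be sketched in Python as follows. This is a conceptual model, not the product's implementation: `page_ranges` and `process_page` are hypothetical names, the thread pool stands in for batch tasks that run in parallel, and the page size of 300 is an arbitrary example value.

```python
from concurrent.futures import ThreadPoolExecutor


def page_ranges(total_records, page_size):
    """Yield an (offset, count) pair for each page (hypothetical helper)."""
    for offset in range(0, total_records, page_size):
        yield offset, min(page_size, total_records - offset)


def process_page(records, offset, count):
    """Stand-in for one batch task: here it only sums the records on the page."""
    return sum(records[offset:offset + count])


records = list(range(1, 1001))  # 1,000 hypothetical records
pages = list(page_ranges(len(records), 300))

# Each page is handled by its own task; the tasks run in parallel threads.
with ThreadPoolExecutor(max_workers=4) as pool:
    results = list(pool.map(lambda page: process_page(records, *page), pages))

print(pages)
print(sum(results))
```

A smaller page size gives more, shorter tasks. Whether that helps depends on how many tasks can actually run at the same time.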

Message

In general, to limit the processing time, on your messages, only map the records and fields that you need for the integration. The more records and fields you map in your message, the longer it takes to process the integration.

To improve the performance of data import or export, you can also consider several message header settings:

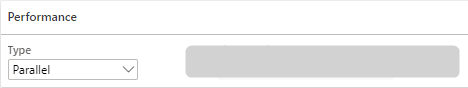

- Performance settings

- Type 'Parallel': You can define how batch tasks are run when a message is processed. If the type is 'Parallel', batch tasks are split over several threads to run in parallel. Parallel processing improves the performance of message processing. Note: Parallel processing is only applied if the message is run in batch.

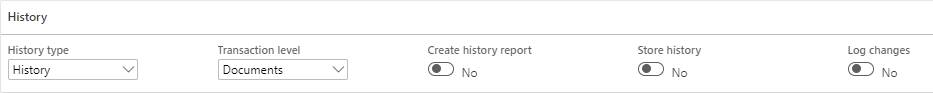

- History settings

- History type 'History':

To improve performance, select 'History' to only store generic message errors in the history for the message. The disadvantage is that fewer details are stored in the message history.

- Transaction level 'Document':

Process all data in one transaction instead of processing the data in separate transactions per root record entity of the document. For example, if you process several sales orders in one message run, all sales orders are processed in the same transaction. Note: Carefully consider the number of records to be processed in one transaction. If you process many records in one transaction and one of these records fails, none of the records are processed. If your transaction level is 'Document' and you process many records in one go, consider using paging or split quantity on the applicable document.
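The difference between one transaction for all data and a separate transaction per root record can be illustrated with a small Python sketch that uses an in-memory SQLite database. Everything in it is an assumption for illustration: the table `sales_order`, the function `import_orders`, and the label 'Record' for the per-root-record behavior are hypothetical and are not taken from the product.

```python
import sqlite3


def import_orders(orders, transaction_level):
    """Insert orders and return how many were stored (hypothetical helper)."""
    conn = sqlite3.connect(":memory:", isolation_level=None)  # explicit transactions
    conn.execute(
        "CREATE TABLE sales_order (id TEXT PRIMARY KEY, qty INTEGER CHECK (qty > 0))"
    )
    insert = "INSERT INTO sales_order (id, qty) VALUES (?, ?)"
    if transaction_level == "Document":
        # One transaction for all orders: a single failure rolls back everything.
        conn.execute("BEGIN")
        try:
            for order in orders:
                conn.execute(insert, order)
            conn.execute("COMMIT")
        except sqlite3.IntegrityError:
            conn.execute("ROLLBACK")
    else:
        # One transaction per order: a failure only affects that order.
        for order in orders:
            conn.execute("BEGIN")
            try:
                conn.execute(insert, order)
                conn.execute("COMMIT")
            except sqlite3.IntegrityError:
                conn.execute("ROLLBACK")
    stored = conn.execute("SELECT COUNT(*) FROM sales_order").fetchone()[0]
    conn.close()
    return stored


# The second order is invalid because its quantity violates the CHECK constraint.
orders = [("SO-1", 5), ("SO-2", 0), ("SO-3", 2)]
stored_document = import_orders(orders, "Document")
stored_record = import_orders(orders, "Record")
print(stored_document, stored_record)
```

With one transaction, the invalid order causes all three orders to be rolled back. With a transaction per order, the two valid orders are stored.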

- Create history report 'No':

Do not create a report in Microsoft Excel format that contains the errors that occurred during a message run. The more errors you have, the more time it takes to create a history report.

- Store history 'No':

Only store the records with errors in the history. Do not store the successfully processed records, as this increases the processing time. If you do store them, the fewer errors you have, the more successfully processed records are stored and the more time it takes to store the history.

- Log changes 'No':

On import, do not log which D365 FO data is changed during import.

Tasks

You can use task dependencies to schedule data import or export in batch. One of the reasons to use task dependencies is to improve the performance of your integration. Tasks that are scheduled at the same level can be processed in parallel. This improves the performance of the data import or export. Also, the messages that are defined for a task are run in parallel.
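How task dependencies lead to levels that can run in parallel can be sketched with Python's standard `graphlib` module (Python 3.9 or later). This is a conceptual model only: the task names and the helper `task_levels` are hypothetical, and the sketch does not describe how the product schedules its batch tasks.

```python
from graphlib import TopologicalSorter


def task_levels(dependencies):
    """Group tasks into levels; tasks within one level do not depend on each other."""
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()
    levels = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # all tasks whose dependencies are done
        levels.append(ready)
        sorter.done(*ready)
    return levels


# Hypothetical tasks, each mapped to the set of tasks it depends on.
dependencies = {
    "Import customers": set(),
    "Import items": set(),
    "Import sales orders": {"Import customers", "Import items"},
    "Export confirmations": {"Import sales orders"},
}

levels = task_levels(dependencies)
for number, tasks in enumerate(levels, start=1):
    print(f"level {number}: {tasks}")
```

In this example, the two import tasks on the first level have no dependencies on each other, so they can run at the same time. The sales order import only starts once both have finished.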
